Bluetek IT Solutions Blog

‘Yeah, We’re Spooked’: AI Starting To Have Big Real-World Impact

A scientist who wrote a leading textbook on artificial intelligence has said experts are “spooked” by their own success in the field, comparing the advance of AI to the development of the atom bomb.

Prof Stuart Russell, the founder of the Center for Human-Compatible Artificial Intelligence at the University of California, Berkeley, said most experts believed that machines more intelligent than humans would be developed this century, and he called for international treaties to regulate the development of the technology.

“The AI community has not yet adjusted to the fact that we are now starting to have a really big impact in the real world,” he told the Guardian. “That simply wasn’t the case for most of the history of the field – we were just in the lab, developing things, trying to get stuff to work, mostly failing to get stuff to work. So the question of real-world impact was just not germane at all. And we have to grow up very quickly to catch up.”

Artificial intelligence underpins many aspects of modern life, from search engines to banking, and advances in image recognition and machine translation are among the key developments in recent years.

Russell – who in 1995 co-authored the seminal book Artificial Intelligence: A Modern Approach, and who will be giving this year’s BBC Reith lectures, entitled “Living with Artificial Intelligence”, which begin on Monday – said urgent work is needed to make sure humans remain in control as superintelligent AI is developed.

“AI has been designed with one particular methodology and sort of general approach. And we’re not careful enough to use that kind of system in complicated real-world settings,” he said.

For example, asking AI to cure cancer as quickly as possible could be dangerous. “It would probably find ways of inducing tumours in the whole human population, so that it could run millions of experiments in parallel, using all of us as guinea pigs,” said Russell. “And that’s because that’s the solution to the objective we gave it; we just forgot to specify that you can’t use humans as guinea pigs and you can’t use up the whole GDP of the world to run your experiments and you can’t do this and you can’t do that.”

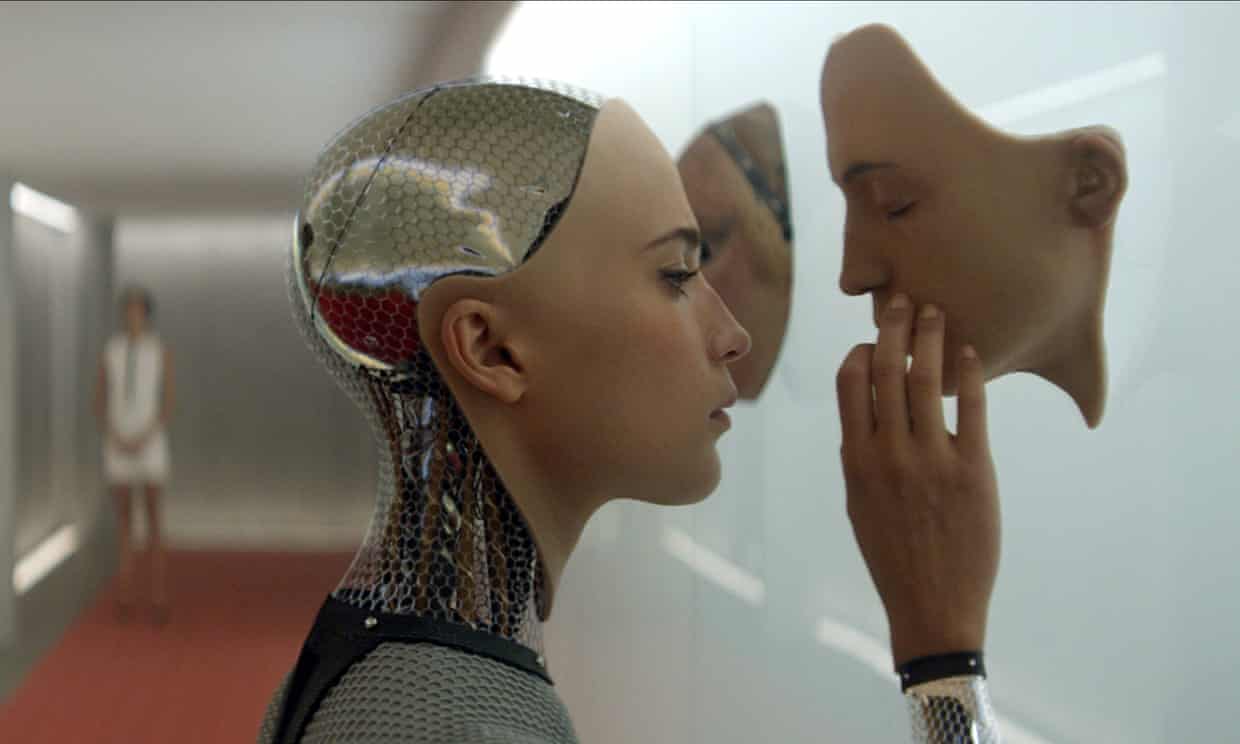

Russell said there was still a big gap between the AI of today and that depicted in films such as Ex Machina, but a future with machines that are more intelligent than humans was on the cards.

“I think numbers range from 10 years for the most optimistic to a few hundred years,” said Russell. “But almost all AI researchers would say it’s going to happen in this century.”

One concern is that a machine would not need to be more intelligent than humans in all things to pose a serious risk. “It’s something that’s unfolding now,” he said. “If you look at social media and the algorithms that choose what people read and watch, they have a huge amount of control over our cognitive input.”

The upshot, he said, is that the algorithms manipulate users, effectively brainwashing them so that their behaviour becomes more predictable in what they choose to engage with, which boosts click-based revenue.

Have AI researchers become spooked by their own success? “Yeah, I think we are increasingly spooked,” Russell said.

“It reminds me a little bit of what happened in physics where the physicists knew that atomic energy existed, they could measure the masses of different atoms, and they could figure out how much energy could be released if you could do the conversion between different types of atoms,” he said, noting that the experts always stressed the idea was theoretical. “And then it happened and they weren’t ready for it.”

The use of AI in military applications – such as small anti-personnel weapons – is of particular concern, he said. “Those are the ones that are very easily scalable, meaning you could put a million of them in a single truck and you could open the back and off they go and wipe out a whole city,” said Russell.

Russell believes the future of AI lies in developing machines that recognise that the true objective, like our preferences, is uncertain, so they must check in with humans, rather like a butler, before any decision. But the idea is complex, not least because different people have different – and sometimes conflicting – preferences, and those preferences are not fixed.

Russell called for measures including a code of conduct for researchers, legislation and treaties to ensure the safety of AI systems in use, and training of researchers to ensure AI is not susceptible to problems such as racial bias. He said EU legislation that would ban impersonation of humans by machines should be adopted around the world.

Russell said he hoped the Reith lectures would emphasise that there is a choice about what the future holds. “It’s really important for the public to be involved in those choices, because it’s the public who will benefit or not,” he said.

But there was another message, too. “Progress in AI is something that will take a while to happen, but it doesn’t make it science fiction,” he said.